Context-Aware Code Generation

Investigating the impact of the execution environment on the stability of LLM-generated code.

This project explores how context, specifically environment configurations and dependency versions, affects the code generated by large language models (LLMs).
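As a rough illustration of what "context" could mean here, the sketch below collects the interpreter version, the operating system, and the installed versions of a few packages, then prepends them to a task description. The function names `describe_environment` and `build_prompt` and the prompt layout are assumptions made for this example, not the project's actual pipeline.

```python
import platform
import sys
from importlib import metadata


def describe_environment(packages):
    """Summarize the interpreter, OS, and selected package versions as text."""
    v = sys.version_info
    lines = [
        f"Python: {v.major}.{v.minor}.{v.micro}",
        f"OS: {platform.system()}",
    ]
    for name in packages:
        try:
            version = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Record the absence explicitly so the model does not assume the package exists.
            version = "not installed"
        lines.append(f"{name}: {version}")
    return "\n".join(lines)


def build_prompt(task, packages):
    """Hypothetical prompt layout: an environment header followed by the task."""
    return f"# Environment\n{describe_environment(packages)}\n\n# Task\n{task}"
```

Calling `build_prompt("Load a CSV file into a DataFrame", ["pandas", "numpy"])` would produce a prompt whose header reflects whatever versions are installed on the machine running it, so the same task can be posed under different environment descriptions.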

Research Questions

- How do variations in prompt context change the functional correctness of generated code?

- Can we fine-tune models to be “environment-aware,” reducing the rate of build failures and runtime errors in AI-generated software?

This work builds upon my recent findings regarding the instability of AI-enabled systems under different environment configurations.

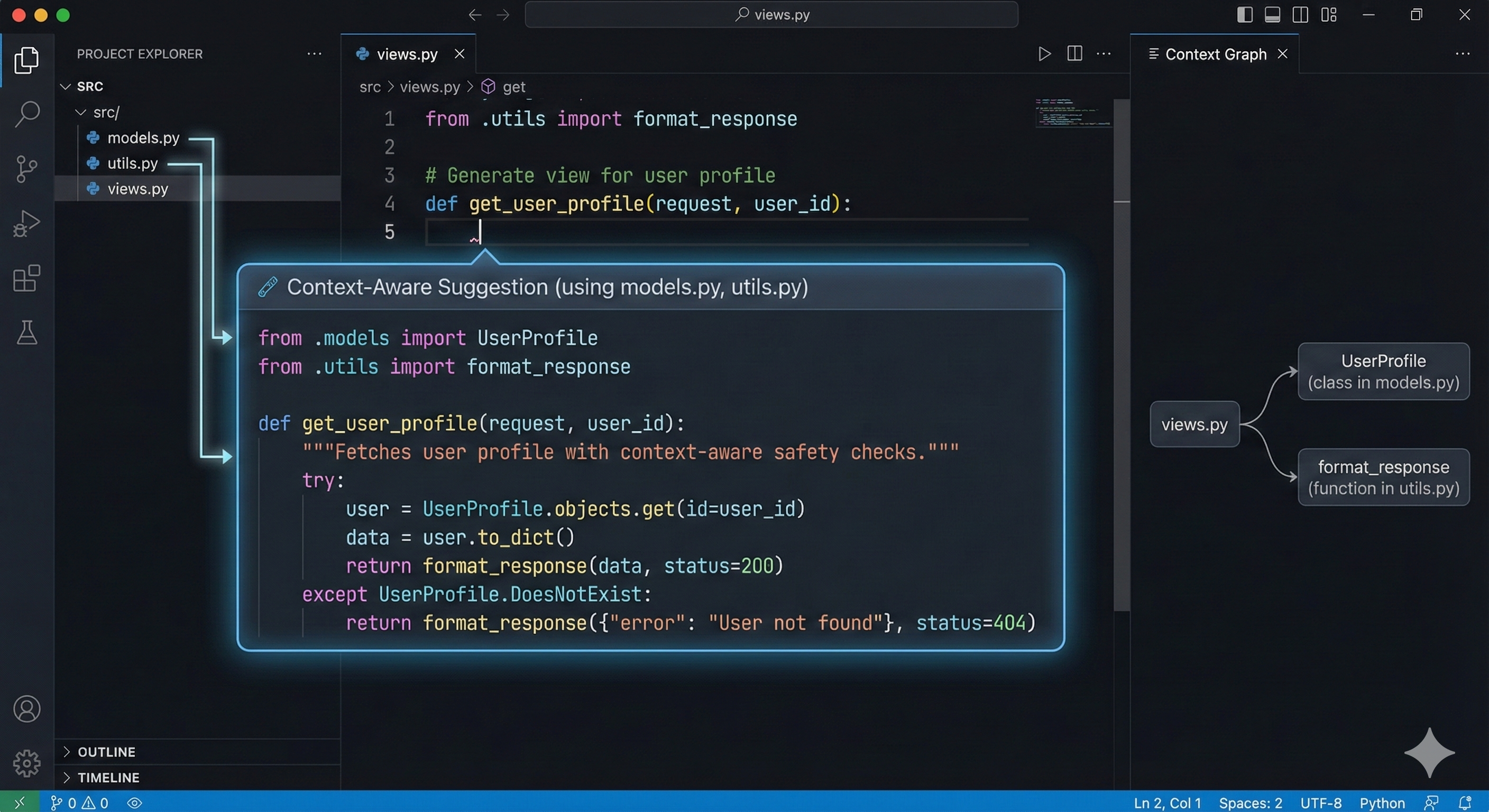

A demonstration of context-aware code generation, where the model uses definitions from existing project files (models.py, utils.py) to provide a correct and complete suggestion.